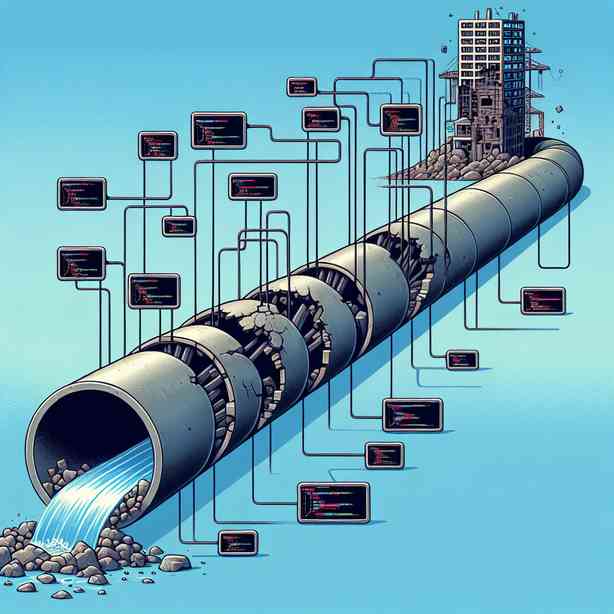

The build pipeline plays a central role in modern software development, particularly in continuous integration and continuous delivery (CI/CD). Yet a pipeline that reports every stage as passing can still ship a release that breaks, or even takes down, the application it delivers. When that happens, it raises hard questions about the tools and processes we rely on. Any team that wants to deliver high-quality software consistently needs to understand how a build pipeline works, what makes it fail, and what can be done to prevent those failures.

To begin, consider what a build pipeline is and what it is made of. A build pipeline is a series of automated steps that compile, test, and deploy code. It typically runs in stages: the code is compiled, then run through automated tests, and finally deployed to a staging or production environment. Each stage is a checkpoint meant to catch bugs or vulnerabilities introduced by new changes before they move any further.
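The staged, stop-on-failure flow described above can be sketched in a few lines of Python. This is a minimal illustration, not any CI platform's API: the `Stage` class and `run_pipeline` function are hypothetical, and each stage's `run` callable stands in for a real compiler, test runner, or deploy tool that reports success or failure.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One pipeline stage: a name plus a callable that returns True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that passed, so the caller can see
    how far the pipeline got before it halted.
    """
    passed: List[str] = []
    for stage in stages:
        if not stage.run():
            print(f"stage '{stage.name}' failed; halting pipeline")
            break
        passed.append(stage.name)
    return passed


if __name__ == "__main__":
    # Placeholder stages; real ones would invoke a compiler, test runner, and deploy tool.
    stages = [
        Stage("compile", lambda: True),
        Stage("test", lambda: False),   # simulate a failing test suite
        Stage("deploy", lambda: True),  # never reached because "test" failed
    ]
    print(run_pipeline(stages))  # prints ['compile'] after the failure message
```

Stopping at the first failed stage is what keeps an untested build from ever reaching the deploy step.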

Version control is one of the foundations of a build pipeline. Version control systems such as Git record every change to a codebase, which lets developers collaborate and manage different versions of the code. A push to the repository's main branch typically triggers the build pipeline and starts the stages described above. Effective version control matters because it gives every developer a shared, up-to-date history of the code and, just as importantly, a known-good commit to roll back to when a change causes unforeseen problems.
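As a rough sketch of the trigger and rollback ideas, the snippet below assumes a hypothetical event dictionary with `type` and `ref` keys (the `ref` value follows Git's `refs/heads/<branch>` naming) and a hypothetical build history of `(commit, passed)` pairs ordered oldest to newest. Real CI platforms deliver their own webhook payloads, so these field names are placeholders.

```python
from typing import Dict, List, Optional, Tuple


def should_trigger(event: Dict[str, str], branch: str = "main") -> bool:
    """Return True when a (hypothetical) push event targets the given branch."""
    return event.get("type") == "push" and event.get("ref") == f"refs/heads/{branch}"


def last_good_commit(history: List[Tuple[str, bool]]) -> Optional[str]:
    """Return the newest commit whose pipeline run passed, i.e. a rollback target.

    `history` is a list of (commit_sha, passed) pairs from oldest to newest.
    """
    for sha, passed in reversed(history):
        if passed:
            return sha
    return None  # no known-good commit to fall back to


if __name__ == "__main__":
    print(should_trigger({"type": "push", "ref": "refs/heads/main"}))  # True
    history = [("a1f3", True), ("b7c2", True), ("c9d4", False)]
    print(last_good_commit(history))  # b7c2
```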

Automated testing is another critical component. Unit tests, integration tests, and end-to-end tests let developers catch bugs early in the development cycle. However, a poorly designed test suite, or one that skips critical paths through the application, can create a false sense of security: every check passes, so developers deploy code that introduces significant problems in production.
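One way to guard against that false sense of security is a coverage gate that checks critical paths explicitly instead of trusting an overall percentage alone. The sketch below works on sets of hypothetical path names (user flows or functions, say) rather than the output of any real coverage tool, and the 80% default threshold is an arbitrary example value.

```python
from typing import List, Set


def check_coverage(
    covered: Set[str],
    all_paths: Set[str],
    critical: Set[str],
    min_ratio: float = 0.8,
) -> List[str]:
    """Return a list of problems; an empty list means the gate passes.

    `covered` holds the paths exercised by the test suite, `all_paths` every
    known path, and `critical` the paths that must be tested no matter what.
    """
    problems: List[str] = []
    ratio = len(covered & all_paths) / len(all_paths) if all_paths else 1.0
    if ratio < min_ratio:
        problems.append(f"coverage {ratio:.0%} is below {min_ratio:.0%}")
    missing = sorted(critical - covered)
    if missing:
        problems.append("uncovered critical paths: " + ", ".join(missing))
    return problems


if __name__ == "__main__":
    paths = {"login", "search", "profile", "settings", "checkout"}
    tested = {"login", "search", "profile", "settings"}
    # 80% overall coverage meets the threshold, yet checkout is untested.
    print(check_coverage(tested, paths, critical={"login", "checkout"}))
    # prints ['uncovered critical paths: checkout']
```

The example shows why a percentage on its own can mislead: the suite clears the threshold while the path most likely to cost money in production has no test at all.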

The configuration of the environments themselves can also cause problems. If the build and test environments differ significantly from production (different operating system packages, runtime versions, or configuration), the application can behave differently once deployed. This is the familiar “it works on my machine” problem, where code that runs flawlessly in one setting fails in another. To reduce this risk, testing and production environments should be as close to identical as possible. Containerization technologies such as Docker help here by packaging an application together with its runtime dependencies into a single image that runs the same way in each environment.
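A small parity check can make environment drift visible before deployment. The sketch below assumes each environment can produce a manifest mapping component names to versions: `capture_environment` records the interpreter version, the platform, and installed package versions using the standard library's `importlib.metadata`, and `diff_environments` compares two manifests. Both function names and the manifest format are hypothetical.

```python
import platform
import sys
from importlib import metadata
from typing import Dict, Iterable, List


def capture_environment(packages: Iterable[str]) -> Dict[str, str]:
    """Record the interpreter version, platform, and version of each named package."""
    env = {"python": platform.python_version(), "os": sys.platform}
    for name in packages:
        try:
            env[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            env[name] = "missing"
    return env


def diff_environments(build: Dict[str, str], prod: Dict[str, str]) -> List[str]:
    """List every component whose version differs between two manifests."""
    diffs: List[str] = []
    for key in sorted(build.keys() | prod.keys()):
        b, p = build.get(key, "absent"), prod.get(key, "absent")
        if b != p:
            diffs.append(f"{key}: build={b} prod={p}")
    return diffs


if __name__ == "__main__":
    # Hypothetical manifests, as if captured in the build image and on a production host.
    build = {"python": "3.12.1", "os": "linux", "openssl": "3.0.13"}
    prod = {"python": "3.11.8", "os": "linux", "openssl": "3.0.13", "libpq": "16.1"}
    for line in diff_environments(build, prod):
        print(line)
    # prints:
    # libpq: build=absent prod=16.1
    # python: build=3.12.1 prod=3.11.8
```

A check like this complements containers rather than replacing them: it flags the drift that a shared image is meant to prevent.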

Deployment strategy also plays a crucial role. Traditional approaches deploy new code directly to a single production environment, while modern practices favor strategies such as blue-green deployments, which switch traffic between two identical environments so the previous version stays ready as a fallback, or canary releases, which send a small share of traffic to the new version before rolling it out to everyone. Both approaches roll out changes in a controlled way and limit the impact of a bad release. A pipeline that deploys straight to production without such a strategy exposes every user to a faulty release at once, which can mean downtime or degraded performance under real traffic.
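A canary release involves two decisions: which requests go to the new version, and whether the new version is healthy enough to promote. The sketch below illustrates both under stated assumptions: users are assigned to the canary by hashing a hypothetical user ID into 100 buckets, and the verdict compares the canary's error rate with the baseline's using an arbitrary tolerance of one percentage point and a minimum sample of 500 requests. These thresholds are illustrative, not recommendations.

```python
import hashlib


def routes_to_canary(user_id: str, percent: int) -> bool:
    """Deterministically send roughly `percent`% of users to the canary.

    Hashing keeps each user on the same version across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < percent


def canary_verdict(
    base_errors: int,
    base_requests: int,
    canary_errors: int,
    canary_requests: int,
    tolerance: float = 0.01,
    min_requests: int = 500,
) -> str:
    """Return 'wait', 'promote', or 'rollback' by comparing error rates."""
    if canary_requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    base_rate = base_errors / base_requests if base_requests else 0.0
    canary_rate = canary_errors / canary_requests
    return "promote" if canary_rate <= base_rate + tolerance else "rollback"


if __name__ == "__main__":
    print(canary_verdict(50, 10_000, 2, 1_000))   # promote: 0.2% vs 0.5% baseline
    print(canary_verdict(50, 10_000, 40, 1_000))  # rollback: 4% vs 0.5% baseline
```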

Dependency management is another area that matters greatly to a pipeline's success. Modern applications rely heavily on third-party libraries and frameworks. If these dependencies are not managed properly, for example if critical updates or security patches are ignored, the application can inherit vulnerabilities. Version mismatches between libraries can also cause runtime errors that may not surface until the application is under load in production. Tracking and updating dependencies as part of the build pipeline is therefore essential to keeping the application sound.
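A pipeline step that compares pinned versions with what is actually installed can catch version mismatches before they reach production. This sketch assumes a simplified requirements format of one `name==version` pin per line and, as a deliberate policy choice, treats any unpinned line as an error; it ignores extras, environment markers, and name normalization, which real tools handle. The optional `installed` mapping lets the check run against supplied data instead of the current environment.

```python
from importlib import metadata
from typing import Dict, Iterable, List, Optional


def parse_pins(lines: Iterable[str]) -> Dict[str, str]:
    """Parse simplified 'name==version' lines, skipping blanks and comments."""
    pins: Dict[str, str] = {}
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop trailing comments and whitespace
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            raise ValueError(f"unpinned requirement: {line!r}")
        pins[name.strip()] = version.strip()
    return pins


def check_pins(pins: Dict[str, str], installed: Optional[Dict[str, str]] = None) -> List[str]:
    """Report packages whose installed version differs from the pin."""
    problems: List[str] = []
    for name, wanted in sorted(pins.items()):
        if installed is not None:
            have = installed.get(name)
        else:
            try:
                have = metadata.version(name)
            except metadata.PackageNotFoundError:
                have = None
        if have is None:
            problems.append(f"{name}: pinned {wanted}, not installed")
        elif have != wanted:
            problems.append(f"{name}: pinned {wanted}, installed {have}")
    return problems


if __name__ == "__main__":
    pins = parse_pins(["requests==2.31.0  # HTTP client", "", "urllib3==2.2.1"])
    # Check against a supplied mapping rather than the live environment.
    print(check_pins(pins, installed={"requests": "2.31.0", "urllib3": "1.26.18"}))
    # prints ['urllib3: pinned 2.2.1, installed 1.26.18']
```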

If all of these factors are accounted for, why do outages still occur? One major factor is the human element. Development and operations teams are often under pressure to ship quickly, which leads to hasty decisions about code changes and deployments. When urgency overrides thorough documentation and review, mistakes slip through. Communication breakdowns between development and operations teams can also leave the two sides with different expectations about whether a release is actually ready.

This is where monitoring and feedback loops come in. A well-designed pipeline does not stop at deployment; it includes mechanisms for monitoring application performance and collecting metrics afterward. Tools that report the health of an application in real time, or that offer insight into user behavior, are invaluable. Without effective monitoring, teams may not notice a problem until it has already caused significant user impact.
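Post-deployment monitoring is usually handled by a dedicated monitoring system, but the core idea, alerting when the recent error rate crosses a threshold, fits in a small class. The sketch below keeps a sliding window of request outcomes in a `collections.deque`; the `ErrorRateMonitor` name, the window size, and the 5% threshold are hypothetical choices for illustration.

```python
from collections import deque
from typing import Deque


class ErrorRateMonitor:
    """Track the last `window` request outcomes and flag a high error rate."""

    def __init__(self, window: int = 100, threshold: float = 0.05) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # True means the request failed
        self.threshold = threshold

    def record(self, failed: bool) -> None:
        # Once the deque is full, appending drops the oldest outcome.
        self.outcomes.append(failed)

    def error_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self) -> bool:
        # Only alert once the window is full, so one early error does not page anyone.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.error_rate() > self.threshold


if __name__ == "__main__":
    monitor = ErrorRateMonitor(window=100, threshold=0.05)
    for i in range(100):
        monitor.record(failed=(i % 10 == 0))  # every tenth request fails
    print(monitor.error_rate(), monitor.should_alert())  # 0.1 True
```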

To reduce the chance of repeating a catastrophic failure, teams should invest in a culture of continuous improvement. Post-mortems and retrospectives after incidents uncover what went wrong and show how processes can be improved. These discussions should be blameless: rather than assigning fault, they should focus on identifying systemic issues and empowering team members to propose solutions. A learning mindset can be one of an organization's greatest assets in navigating the complexities of modern software development.

Building a resilient pipeline also requires the right tools. Many organizations use CI/CD platforms that provide structured workflows for automating builds, tests, and deployments. The effectiveness of these tools, however, depends heavily on how well they are configured and integrated into the development life cycle. Choosing tools that fit your team's workflow and have strong community support can significantly improve the reliability of your build pipelines.

Security is another layer that needs to be woven into every stage of the pipeline. With software supply chain attacks on the rise, security practices belong inside the pipeline rather than bolted on after it. Automated security checks, including tools that scan dependencies for known vulnerabilities, should be standard in any pipeline.
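Dependency scanners such as pip-audit compare installed versions against advisory databases. The sketch below shows only the core comparison, using a hardcoded, hypothetical advisory (`examplelib` and the ID `EXAMPLE-2024-0001` are invented) and assuming plain numeric dotted versions such as `1.4.2`. A real scanner fetches advisories from a maintained database and parses versions according to the ecosystem's rules.

```python
from typing import Dict, List, NamedTuple, Tuple


class Advisory(NamedTuple):
    package: str
    introduced: str  # first affected version (inclusive)
    fixed: str       # first patched version (exclusive upper bound)
    advisory_id: str


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(installed: Dict[str, str], advisories: List[Advisory]) -> List[str]:
    """Return one finding per installed package that falls in an affected range."""
    findings: List[str] = []
    for adv in advisories:
        version = installed.get(adv.package)
        if version is None:
            continue  # package not installed, advisory does not apply
        if parse_version(adv.introduced) <= parse_version(version) < parse_version(adv.fixed):
            findings.append(
                f"{adv.package} {version} is affected by {adv.advisory_id}; upgrade to {adv.fixed}"
            )
    return findings


if __name__ == "__main__":
    advisories = [Advisory("examplelib", "1.0.0", "1.4.2", "EXAMPLE-2024-0001")]
    for finding in find_vulnerable({"examplelib": "1.4.1", "otherlib": "2.0.0"}, advisories):
        print(finding)
    # prints: examplelib 1.4.1 is affected by EXAMPLE-2024-0001; upgrade to 1.4.2
```

In a pipeline, a non-empty list of findings would typically make the security stage exit with a non-zero status so the build stops before deployment.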

In conclusion, the build pipeline is a powerful mechanism that has the potential to streamline software development and enhance product delivery. Yet, it is not immune to failure, and the ramifications of that failure can be severe. Understanding the multitude of factors that contribute to the success or downfall of a build pipeline empowers development teams to implement better practices, maintain effective monitoring, and foster a culture of resilience and continuous learning. As we embrace the complexities of modern software development, let us leverage these insights to build stronger, more dependable applications that stand the test of time.